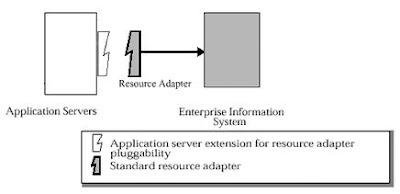

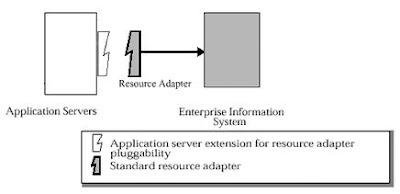

Connectors, as the name suggests, are a way of connecting to the different components of an enterprise infrastructure, much like USB (plug-and-play) devices. The specification they adhere to is the J2EE Connector Architecture (JCA).

The J2EE Connector Architecture defines standard Java interfaces that simplify the integration of enterprise applications with J2EE-based Java applications. With these interfaces, Java developers can access existing databases, ERP applications, and legacy systems. The connector, also known as a "resource adapter," appears as a component library that the developer can access from the Java programming language.

The JCA has two basic components: the Common Client Interface (CCI) and a set of system-level contracts. An adapter developer provides an implementation of the CCI along with the adapter's side of the system contracts specified by the connector architecture. The application server vendor implements its side of the system contracts as part of its base J2EE platform.

CCI is a programming interface that application developers and client programs can use to connect to and access back-end systems. It is a low-level API, similar to JDBC; unlike JDBC, however, CCI can work with nonrelational systems. CCI manages the flow of data between the application and the back-end system and has no visibility into what the container and the application server are doing. Although application developers can call the CCI directly, in most cases they will write to an abstraction layer, provided by the connector provider or an enterprise application integration (EAI) framework vendor, to simplify development.

On the platform side, JCA defines a set of service contracts that a connector developer can expect to be available to the adapter at runtime. As with the CCI, the specification defines only which services must be present; it is up to the application server vendor to provide the actual implementation.

To enable seamless integration with an application server, a resource adapter must abide by guidelines, known as system-level contracts. These contracts specify how a system external to the J2EE platform can integrate with it by supporting basic functions that are handled by the J2EE container. There are three major categories of these functions:

Connection management: The connection management contract allows applications to connect to an EIS. It also enables the application server to pool those connections.

Transaction management: The transaction management contract allows an application to manage and perform transactional access across one or more EIS resource managers.

Security: The security contract provides support for secure access to the EIS.
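To make the connection management contract more concrete, here is a minimal, self-contained sketch of the pooling loop an application server runs on the adapter's behalf. It uses hypothetical stand-in types (PhysicalConnection, SimplePool) instead of the real javax.resource.spi interfaces, so it compiles without the JCA jar; the point is only the order of operations: reuse a matching idle connection if there is one, create a new one otherwise, and put it back into the idle set when the application is done.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Objects;

// Hypothetical stand-in for a physical EIS connection (not a JCA type)
final class PhysicalConnection {
    final String user;

    PhysicalConnection(String user) {
        this.user = user;
    }
}

// Hypothetical, simplified pool showing what the connection management
// contract lets a server do: reuse a matching idle connection, else create one
final class SimplePool {
    private final List<PhysicalConnection> idle = new ArrayList<>();
    private int created = 0;

    // Roughly the job of the server's ConnectionManager.allocateConnection()
    PhysicalConnection allocate(String user) {
        Iterator<PhysicalConnection> it = idle.iterator();
        while (it.hasNext()) {
            PhysicalConnection pc = it.next();
            // In JCA, the adapter's matchManagedConnections() makes this decision
            if (Objects.equals(pc.user, user)) {
                it.remove();
                return pc;
            }
        }
        // No match: in JCA the server would call createManagedConnection()
        created++;
        return new PhysicalConnection(user);
    }

    // Called once the server learns (via a CONNECTION_CLOSED event) that the
    // application has finished with the connection
    void release(PhysicalConnection pc) {
        idle.add(pc);
    }

    int createdCount() {
        return created;
    }
}
```

Note that the sample adapter's matchManagedConnections() always returns null, so a server following this loop would never reuse one of its connections.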

How to build your own adapter

In this post we will demonstrate how to implement a JCA adapter: a set of classes with which a J2EE application server targets a particular enterprise system. A JCA adapter plays the same role for an EIS that a JDBC driver plays for a database. Developing a full-featured JCA adapter is a complex task; by the end, however, you will understand a basic JCA adapter's construction and grasp the effort required to build your own.

This sample adapter will not actually connect to an enterprise system. For demonstration purposes, it implements only the interfaces required to deploy an adapter and look up a connection. It does not use the Common Client Interface (CCI) either.

The adapter includes two class categories:

Managed classes: The application server calls the managed classes to perform connection management. They are required only if the application server manages the connections through a connection pool.

Physical connection classes: These classes may be called by the managed classes to establish the connection to an enterprise information system (EIS).

MyManagedConnectionFactory

The MyManagedConnectionFactory class implements the ManagedConnectionFactory interface and creates the connection factory (MyDataSource) and MyManagedConnection instances. It is the main entry point through which the application server invokes the adapter:

```java
package org.acme.jca;

import java.io.PrintWriter;
import java.io.Serializable;
import java.util.Set;

import javax.resource.ResourceException;
import javax.resource.spi.*;
import javax.security.auth.Subject;

public class MyManagedConnectionFactory
        implements ManagedConnectionFactory, Serializable {

    // PrintWriter is not serializable, so keep it out of the factory's serialized state
    private transient PrintWriter logWriter;

    public MyManagedConnectionFactory() {
        System.out.println("In MyManagedConnectionFactory.constructor");
    }

    // Managed environment: the application server supplies its own
    // ConnectionManager (pooling, transactions, security)
    public Object createConnectionFactory(ConnectionManager cxManager) throws ResourceException {
        System.out.println("In MyManagedConnectionFactory.createConnectionFactory,1");
        return new MyDataSource(this, cxManager);
    }

    // Non-managed environment: MyDataSource falls back to its own ConnectionManager
    public Object createConnectionFactory() throws ResourceException {
        System.out.println("In MyManagedConnectionFactory.createConnectionFactory,2");
        return new MyDataSource(this, null);
    }

    // Creates a new managed connection; the user name is hard-coded for the demo
    public ManagedConnection createManagedConnection(Subject subject, ConnectionRequestInfo info) {
        System.out.println("In MyManagedConnectionFactory.createManagedConnection");
        return new MyManagedConnection(this, "test");
    }

    // Returning null tells the server that no pooled connection matches,
    // so it always creates a new one
    public ManagedConnection matchManagedConnections(Set connectionSet, Subject subject,
            ConnectionRequestInfo info) throws ResourceException {
        System.out.println("In MyManagedConnectionFactory.matchManagedConnections");
        return null;
    }

    public void setLogWriter(PrintWriter out) throws ResourceException {
        System.out.println("In MyManagedConnectionFactory.setLogWriter");
        logWriter = out;
    }

    public PrintWriter getLogWriter() throws ResourceException {
        System.out.println("In MyManagedConnectionFactory.getLogWriter");
        return logWriter;
    }

    // This factory has no configuration properties, so all instances are
    // interchangeable: they are equal and share one hash code
    public boolean equals(Object obj) {
        return obj instanceof MyManagedConnectionFactory;
    }

    public int hashCode() {
        return 1;
    }
}
```
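JCA requires a ManagedConnectionFactory to implement equals() and hashCode(), and the usual approach is to base both on the factory's configuration properties so the server can tell differently configured factories apart. MyManagedConnectionFactory has no properties, which is why treating every instance as equal is acceptable here. The sketch below shows the pattern once properties exist; FactoryConfig and its serverName/port properties are hypothetical, and the class is a plain stand-in so it compiles without the JCA jar.

```java
import java.util.Objects;

// Hypothetical configuration holder; in a real adapter these would be
// JavaBean properties on the ManagedConnectionFactory itself
final class FactoryConfig {
    private String serverName;
    private int port;

    public void setServerName(String serverName) { this.serverName = serverName; }
    public void setPort(int port) { this.port = port; }

    // Two factories are interchangeable only if every configuration property matches
    @Override
    public boolean equals(Object obj) {
        if (this == obj) return true;
        if (!(obj instanceof FactoryConfig)) return false;
        FactoryConfig other = (FactoryConfig) obj;
        return port == other.port && Objects.equals(serverName, other.serverName);
    }

    // Built from the same properties as equals(), so equal objects hash alike
    @Override
    public int hashCode() {
        return Objects.hash(serverName, port);
    }
}
```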

MyManagedConnection

The MyManagedConnection class implements the ManagedConnection interface. MyManagedConnection encapsulates the adapter's physical connection, in this case the MyConnection class:

```java
package org.acme.jca;

import java.io.PrintWriter;
import java.sql.Connection;
import java.sql.SQLException;
import java.util.*;

import javax.resource.ResourceException;
import javax.resource.spi.*;
import javax.security.auth.Subject;
import javax.transaction.xa.XAResource;

public class MyManagedConnection
        implements ManagedConnection {

    private MyConnectionEventListener myListener;
    private String user;
    private ManagedConnectionFactory mcf;
    private PrintWriter logWriter;
    private boolean destroyed;
    // Application-level handles (MyConnection) currently handed out
    private Set connectionSet;

    MyManagedConnection(ManagedConnectionFactory mcf, String user) {
        System.out.println("In MyManagedConnection");
        this.mcf = mcf;
        this.user = user;
        connectionSet = new HashSet();
        myListener = new MyConnectionEventListener(this);
    }

    // Wraps an SQLException in the ResourceException type used by the JCA contracts
    private void throwResourceException(SQLException ex)
            throws ResourceException {
        ResourceException re = new ResourceException("SQLException: " + ex.getMessage());
        re.setLinkedException(ex);
        throw re;
    }

    // Hands out a new application-level handle backed by this managed connection
    public Object getConnection(Subject subject, ConnectionRequestInfo connectionRequestInfo)
            throws ResourceException {
        System.out.println("In MyManagedConnection.getConnection");
        MyConnection myCon = new MyConnection(this);
        addMyConnection(myCon);
        return myCon;
    }

    // Called when the server discards this connection for good
    public void destroy() {
        System.out.println("In MyManagedConnection.destroy");
        destroyed = true;
    }

    // Called before the connection goes back into the pool: forget the handles
    public void cleanup() {
        System.out.println("In MyManagedConnection.cleanup");
        connectionSet.clear();
    }

    public void associateConnection(Object connection) {
        System.out.println("In MyManagedConnection.associateConnection");
    }

    public void addConnectionEventListener(ConnectionEventListener listener) {
        System.out.println("In MyManagedConnection.addConnectionEventListener");
        myListener.addConnectorListener(listener);
    }

    public void removeConnectionEventListener(ConnectionEventListener listener) {
        System.out.println("In MyManagedConnection.removeConnectionEventListener");
        myListener.removeConnectorListener(listener);
    }

    // No XA (distributed) transaction support in this sample
    public XAResource getXAResource() throws ResourceException {
        System.out.println("In MyManagedConnection.getXAResource");
        return null;
    }

    // No local transaction support in this sample
    public LocalTransaction getLocalTransaction() {
        System.out.println("In MyManagedConnection.getLocalTransaction");
        return null;
    }

    public ManagedConnectionMetaData getMetaData() throws ResourceException {
        System.out.println("In MyManagedConnection.getMetaData");
        return new MyConnectionMetaData(this);
    }

    public void setLogWriter(PrintWriter out) throws ResourceException {
        System.out.println("In MyManagedConnection.setLogWriter");
        logWriter = out;
    }

    public PrintWriter getLogWriter() throws ResourceException {
        System.out.println("In MyManagedConnection.getLogWriter");
        return logWriter;
    }

    Connection getMyConnection() throws ResourceException {
        System.out.println("In MyManagedConnection.getMyConnection");
        return null;
    }

    boolean isDestroyed() {
        System.out.println("In MyManagedConnection.isDestroyed");
        return destroyed;
    }

    String getUserName() {
        System.out.println("In MyManagedConnection.getUserName");
        return user;
    }

    void sendEvent(int eventType, Exception ex) {
        System.out.println("In MyManagedConnection.sendEvent,1");
        myListener.sendEvent(eventType, ex, null);
    }

    void sendEvent(int eventType, Exception ex, Object connectionHandle) {
        System.out.println("In MyManagedConnection.sendEvent,2 ");
        myListener.sendEvent(eventType, ex, connectionHandle);
    }

    void removeMyConnection(MyConnection myCon) {
        System.out.println("In MyManagedConnection.removeMyConnection");
        connectionSet.remove(myCon);
    }

    void addMyConnection(MyConnection myCon) {
        System.out.println("In MyManagedConnection.addMyConnection");
        connectionSet.add(myCon);
    }

    ManagedConnectionFactory getManagedConnectionFactory() {
        System.out.println("In MyManagedConnection.getManagedConnectionFactory");
        return mcf;
    }
}
```
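MyManagedConnection.getConnection() hands out a new MyConnection handle on every call and records it in connectionSet, but nothing ever closes a handle or tells the server about it. In a working adapter, closing a handle removes it from the set and fires a CONNECTION_CLOSED event, so the server can return the managed connection to its pool. The round trip is sketched below with hypothetical stand-ins (Handle for MyConnection, ManagedConn for MyManagedConnection, CloseListener for the JCA listener interface) so it compiles without the JCA jar.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Stand-in for javax.resource.spi.ConnectionEventListener (close callback only)
interface CloseListener {
    void connectionClosed(ManagedConn source, Object handle);
}

// Stand-in for MyConnection: the application-level handle
final class Handle {
    private final ManagedConn owner;
    private boolean closed;

    Handle(ManagedConn owner) {
        this.owner = owner;
    }

    // Closing a handle does not close the physical connection; it only tells
    // the managed connection that this handle is finished (idempotent)
    void close() {
        if (!closed) {
            closed = true;
            owner.handleClosed(this);
        }
    }
}

// Stand-in for MyManagedConnection
final class ManagedConn {
    private final Set<Handle> handles = new HashSet<>();
    private final List<CloseListener> listeners = new ArrayList<>();

    void addListener(CloseListener l) {
        listeners.add(l);
    }

    // Like getConnection(): create a handle and remember it
    Handle getConnection() {
        Handle h = new Handle(this);
        handles.add(h);
        return h;
    }

    // Like removeMyConnection() followed by sendEvent(CONNECTION_CLOSED, null, handle)
    void handleClosed(Handle h) {
        handles.remove(h);
        for (CloseListener l : new ArrayList<>(listeners)) {
            l.connectionClosed(this, h);
        }
    }

    int openHandles() {
        return handles.size();
    }
}
```

In the sample adapter, the server registers its listener through addConnectionEventListener(); without a close event it has no way to learn that a connection can be reused.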

MyConnectionEventListener

The MyConnectionEventListener class lets the application server register callbacks with the adapter. The server can then perform operations, such as connection-pool maintenance, based on the connection's state:

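```java
package org.acme.jca;

import java.util.Vector;

import javax.resource.spi.ConnectionEvent;
import javax.resource.spi.ConnectionEventListener;
import javax.resource.spi.ManagedConnection;

public class MyConnectionEventListener
        implements javax.sql.ConnectionEventListener {

    // JCA listeners registered by the application server
    private Vector listeners = new Vector();
    private ManagedConnection mcon;

    public MyConnectionEventListener(ManagedConnection mcon) {
        System.out.println("In MyConnectionEventListener");
        this.mcon = mcon;
    }

    // Wraps the event in a JCA ConnectionEvent and forwards it to every registered
    // listener (CONNECTION_CLOSED, for example, lets the server reuse the connection)
    public void sendEvent(int eventType, Exception ex, Object connectionHandle) {
        System.out.println("In MyConnectionEventListener.sendEvent");
        ConnectionEvent ce = (ex == null)
                ? new ConnectionEvent(mcon, eventType)
                : new ConnectionEvent(mcon, eventType, ex);
        if (connectionHandle != null)
            ce.setConnectionHandle(connectionHandle);
        // Notify a snapshot, so a listener may deregister itself during the callback
        Vector copy = (Vector) listeners.clone();
        for (int i = 0; i < copy.size(); i++) {
            ConnectionEventListener l = (ConnectionEventListener) copy.elementAt(i);
            switch (eventType) {
                case ConnectionEvent.CONNECTION_CLOSED: l.connectionClosed(ce); break;
                case ConnectionEvent.CONNECTION_ERROR_OCCURRED: l.connectionErrorOccurred(ce); break;
                case ConnectionEvent.LOCAL_TRANSACTION_STARTED: l.localTransactionStarted(ce); break;
                case ConnectionEvent.LOCAL_TRANSACTION_COMMITTED: l.localTransactionCommitted(ce); break;
                case ConnectionEvent.LOCAL_TRANSACTION_ROLLEDBACK: l.localTransactionRolledback(ce); break;
                default: throw new IllegalArgumentException("Unknown event type: " + eventType);
            }
        }
    }

    public void addConnectorListener(ConnectionEventListener l) {
        System.out.println("In MyConnectionEventListener.addConnectorListener");
        listeners.addElement(l);
    }

    public void removeConnectorListener(ConnectionEventListener l) {
        System.out.println("In MyConnectionEventListener.removeConnectorListener");
        listeners.removeElement(l);
    }

    // javax.sql.ConnectionEventListener (JDBC pooling) callbacks; the JCA
    // contract does not call these, so they only log
    public void connectionClosed(javax.sql.ConnectionEvent connectionevent) {
        System.out.println("In MyConnectionEventListener.connectorClosed");
    }

    public void connectionErrorOccurred(javax.sql.ConnectionEvent event) {
        System.out.println("In MyConnectionEventListener.connectorErrorOccurred");
    }
}
```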

MyConnectionMetaData

The MyConnectionMetaData class provides meta information (product name, product version, the maximum number of connections allowed, and so on) about the managed connection and the underlying physical connection class:

```java
package org.acme.jca;

import javax.resource.ResourceException;
import javax.resource.spi.*;

public class MyConnectionMetaData
        implements ManagedConnectionMetaData {

    private MyManagedConnection mc;

    public MyConnectionMetaData(MyManagedConnection mc) {
        System.out.println("In MyConnectionMetaData.constructor");
        this.mc = mc;
    }

    public String getEISProductName() throws ResourceException {
        System.out.println("In MyConnectionMetaData.getEISProductName");
        return "myJCA";
    }

    public String getEISProductVersion() throws ResourceException {
        System.out.println("In MyConnectionMetaData.getEISProductVersion");
        return "1.0";
    }

    // Hard-coded limit reported to the application server
    public int getMaxConnections() throws ResourceException {
        System.out.println("In MyConnectionMetaData.getMaxConnections");
        return 5;
    }

    public String getUserName() throws ResourceException {
        return mc.getUserName();
    }
}
```

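MyConnection

The MyConnection class represents the connection to the EIS; it is the object that MyManagedConnection.getConnection() hands back to the application. MyConnection is one of the few classes that does not implement an interface from the JCA specification. The implementation below is simplistic, but a working implementation might contain connectivity code using sockets, as well as other functionality. Note that MyDataSource.getConnection() casts this object to java.sql.Connection; because MyConnection does not implement that interface, the cast fails with a ClassCastException once the connection is returned, so an adapter that really exposes a DataSource needs a connection class that implements java.sql.Connection.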

```java
package org.acme.jca;

public class MyConnection {

    // The managed connection that created this handle
    private MyManagedConnection mc;

    public MyConnection(MyManagedConnection mc) {
        System.out.println("In MyConnection");
        this.mc = mc;
    }
}
```
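Since a working MyConnection might contain socket connectivity code, here is a minimal sketch of what such a physical connection could look like. It assumes a hypothetical EIS reachable over TCP at a host and port; the class name SocketEisConnection is illustrative and nothing in the sample adapter defines this protocol.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical physical connection to a socket-based EIS
final class SocketEisConnection implements AutoCloseable {
    private final Socket socket;

    // Opens the TCP connection with a connect timeout, so an unreachable EIS
    // fails fast instead of blocking an application server thread
    SocketEisConnection(String host, int port, int timeoutMillis) throws IOException {
        socket = new Socket();
        try {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
        } catch (IOException e) {
            socket.close();
            throw e;
        }
    }

    boolean isOpen() {
        return socket.isConnected() && !socket.isClosed();
    }

    // In an adapter this would be called from ManagedConnection.destroy(),
    // not when the application closes its handle, because the connection is pooled
    @Override
    public void close() throws IOException {
        socket.close();
    }
}
```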

MyConnectionRequestInfo

The MyConnectionRequestInfo class contains the data (such as the user name, password, and other information) necessary to establish a connection:

```java
package org.acme.jca;

import javax.resource.spi.ConnectionRequestInfo;

public class MyConnectionRequestInfo
        implements ConnectionRequestInfo {

    private String user;
    private String password;

    public MyConnectionRequestInfo(String user, String password) {
        System.out.println("In MyConnectionRequestInfo");
        this.user = user;
        this.password = password;
    }

    public String getUser() {
        System.out.println("In MyConnectionRequestInfo.getUser");
        return user;
    }

    public String getPassword() {
        System.out.println("In MyConnectionRequestInfo.getPassword");
        return password;
    }

    // Two requests are equal when user and password match; this equality is
    // what decides whether a pooled connection can be reused for a request
    public boolean equals(Object obj) {
        System.out.println("In MyConnectionRequestInfo.equals");
        if (obj == null)
            return false;
        if (obj instanceof MyConnectionRequestInfo) {
            MyConnectionRequestInfo other = (MyConnectionRequestInfo) obj;
            return isEqual(user, other.user) && isEqual(password, other.password);
        } else {
            return false;
        }
    }

    // Consistent with equals(): equal requests build the same string, hence the same hash
    public int hashCode() {
        System.out.println("In MyConnectionRequestInfo.hashCode");
        String result = "" + user + password;
        return result.hashCode();
    }

    // Null-safe equality helper
    private boolean isEqual(Object o1, Object o2) {
        System.out.println("In MyConnectionRequestInfo.isEqual");
        if (o1 == null)
            return o2 == null;
        else
            return o1.equals(o2);
    }
}
```
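Equality on the request info is what lets an adapter reuse pooled connections: matchManagedConnections() can return an existing connection only if it was created for an equal request. The sketch below writes the same value semantics with java.util.Objects, which replaces the hand-rolled null check and the string concatenation in hashCode(), and adds a matching helper in the spirit of matchManagedConnections(). RequestInfo, PooledEntry, and RequestMatcher are hypothetical stand-ins so the example compiles without the JCA jar.

```java
import java.util.Objects;
import java.util.Set;

// Stand-in for MyConnectionRequestInfo with the same equality rules
final class RequestInfo {
    private final String user;
    private final String password;

    RequestInfo(String user, String password) {
        this.user = user;
        this.password = password;
    }

    @Override
    public boolean equals(Object obj) {
        if (this == obj) return true;
        if (!(obj instanceof RequestInfo)) return false;
        RequestInfo other = (RequestInfo) obj;
        return Objects.equals(user, other.user) && Objects.equals(password, other.password);
    }

    @Override
    public int hashCode() {
        return Objects.hash(user, password);
    }
}

// Stand-in for a pooled ManagedConnection that remembers the request it was created for
final class PooledEntry {
    final RequestInfo createdFor;

    PooledEntry(RequestInfo createdFor) {
        this.createdFor = createdFor;
    }
}

final class RequestMatcher {
    // Same contract as matchManagedConnections(): return a reusable candidate,
    // or null so the server creates a new connection (a null request matches null)
    static PooledEntry match(Set<PooledEntry> candidates, RequestInfo requested) {
        for (PooledEntry e : candidates) {
            if (Objects.equals(e.createdFor, requested)) {
                return e;
            }
        }
        return null;
    }
}
```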

MyDataSource

The MyDataSource class serves as the connection factory for the underlying connections. Because the sample adapter does not implement the CCI interfaces, it implements the DataSource interface from the javax.sql package instead:

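```java
package org.acme.jca;

import java.io.PrintWriter;
import java.io.Serializable;
import java.sql.*;

import javax.naming.Reference;
import javax.resource.Referenceable;
import javax.resource.ResourceException;
import javax.resource.spi.ConnectionManager;
import javax.resource.spi.ConnectionRequestInfo;
import javax.resource.spi.ManagedConnection;
import javax.resource.spi.ManagedConnectionFactory;
import javax.sql.DataSource;

public class MyDataSource
        implements DataSource, Serializable, Referenceable {

    private String desc;
    private ManagedConnectionFactory mcf;
    private ConnectionManager cm;
    private Reference reference;

    public MyDataSource(ManagedConnectionFactory mcf, ConnectionManager cm) {
        System.out.println("In MyDataSource");
        this.mcf = mcf;
        // A null ConnectionManager means a non-managed environment: use our own
        if (cm == null)
            this.cm = new MyConnectionManager();
        else
            this.cm = cm;
    }

    public Connection getConnection() throws SQLException {
        System.out.println("In MyDataSource.getConnection,1");
        try {
            // The ConnectionManager decides whether to reuse or create a connection
            return (Connection) cm.allocateConnection(mcf, null);
        } catch (ResourceException ex) {
            throw new SQLException(ex.getMessage());
        }
    }

    public Connection getConnection(String username, String password) throws SQLException {
        System.out.println("In MyDataSource.getConnection,2");
        try {
            // Pass the credentials to the adapter as a ConnectionRequestInfo
            ConnectionRequestInfo info = new MyConnectionRequestInfo(username, password);
            return (Connection) cm.allocateConnection(mcf, info);
        } catch (ResourceException ex) {
            throw new SQLException(ex.getMessage());
        }
    }

    public int getLoginTimeout() throws SQLException {
        return DriverManager.getLoginTimeout();
    }

    public void setLoginTimeout(int seconds) throws SQLException {
        DriverManager.setLoginTimeout(seconds);
    }

    public PrintWriter getLogWriter() throws SQLException {
        return DriverManager.getLogWriter();
    }

    public void setLogWriter(PrintWriter out) throws SQLException {
        DriverManager.setLogWriter(out);
    }

    // Required by java.sql.Wrapper (Java 6+) and CommonDataSource (Java 7+)
    public <T> T unwrap(Class<T> iface) throws SQLException {
        if (iface.isInstance(this))
            return iface.cast(this);
        throw new SQLException("Not a wrapper for " + iface.getName());
    }

    public boolean isWrapperFor(Class<?> iface) throws SQLException {
        return iface.isInstance(this);
    }

    public java.util.logging.Logger getParentLogger() throws SQLFeatureNotSupportedException {
        throw new SQLFeatureNotSupportedException();
    }

    public String getDescription() {
        return desc;
    }

    public void setDescription(String desc) {
        this.desc = desc;
    }

    public void setReference(Reference reference) {
        this.reference = reference;
    }

    public Reference getReference() {
        return reference;
    }

    // MyConnectionManager is referenced above but not listed in this post.
    // Assumed minimal version for non-managed use: no pooling and no
    // transactions; every request creates a new managed connection.
    static class MyConnectionManager implements ConnectionManager {
        public Object allocateConnection(ManagedConnectionFactory mcf,
                ConnectionRequestInfo info) throws ResourceException {
            ManagedConnection mc = mcf.createManagedConnection(null, info);
            return mc.getConnection(null, info);
        }
    }
}
```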

Now compile and build testjca.rar

Now that you've seen the adapter's source code, it's time to build the testjca.rar file. This assumes a source directory containing two subdirectories: testjca, holding the .java files, and META-INF, holding the configuration files (the resource adapter deployment descriptor, ra.xml).

To compile and build the rar file:

1. From the source directory, compile the classes by typing javac -d . testjca/*.java (the JCA API classes supplied with your application server or J2EE SDK must be on the classpath). Because the classes are in the org.acme.jca package, the class files end up under org/acme/jca.

2. Build testjca.jar from the source directory by entering jar cvf testjca.jar org

3. Create the rar file from testjca.jar and the META-INF directory by typing jar cvf testjca.rar testjca.jar META-INF
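Before deploying, it can help to confirm that the archive has the expected layout: the adapter jar at the top level and the deployment descriptor at META-INF/ra.xml. A small standard-library checker using java.util.zip, where the helper name RarCheck is hypothetical:

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.ZipFile;

final class RarCheck {
    // Returns the entries a minimal resource adapter archive should contain
    // but this one does not; an empty list means the layout looks right
    static List<String> missingEntries(String rarPath, String adapterJar) throws IOException {
        List<String> missing = new ArrayList<>();
        try (ZipFile rar = new ZipFile(rarPath)) {
            for (String name : new String[] {"META-INF/ra.xml", adapterJar}) {
                if (rar.getEntry(name) == null) {
                    missing.add(name);
                }
            }
        }
        return missing;
    }
}
```

For the archive built above, RarCheck.missingEntries("testjca.rar", "testjca.jar") should return an empty list.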

Deployment output

Once you deploy the adapter rar file, you should see the output of the println statements contained in most of the adapter's methods. You should see output similar to the following as the adapter deploys:

```
In MyManagedConnectionFactory.constructor
In MyManagedConnectionFactory.createManagedConnection
In MyManagedConnection
In MyConnectionEventListener
In MyManagedConnection.getMetaData
In MyConnectionMetaData.constructor
In MyConnectionMetaData.getEISProductName
In MyConnectionMetaData.getEISProductVersion
In MyConnectionMetaData.getMaxConnections
```

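The output above shows the application server creating the ManagedConnectionFactory and asking it for a ManagedConnection, whose constructor in turn creates the MyConnectionEventListener. Finally, the application server retrieves the connection's metadata (MyConnectionMetaData) and reads the EIS product name, version, and maximum number of connections.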

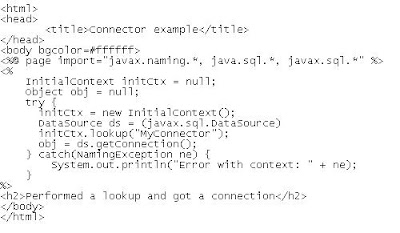

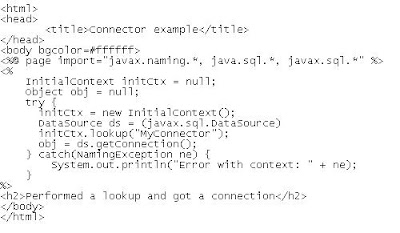

Get a connection

Now that you've deployed the adapter successfully, let's use the adapter to obtain a connection. The following JSP (JavaServer Pages) file does just that, by looking up the connection using JNDI (Java Naming and Directory Interface), then calling the getConnection() method on the DataSource:
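The lookup boils down to two calls: a JNDI lookup of the adapter's connection factory, then DataSource.getConnection(). A plain-Java sketch of the same steps; the JNDI name java:comp/env/eis/MyJCA and the class AdapterClient are hypothetical placeholders, so substitute the name your application server binds the adapter's connection factory under.

```java
import java.sql.Connection;
import java.sql.SQLException;
import javax.naming.Context;
import javax.naming.InitialContext;
import javax.naming.NamingException;
import javax.sql.DataSource;

final class AdapterClient {
    // Hypothetical JNDI name for the adapter's connection factory (MyDataSource)
    static final String JNDI_NAME = "java:comp/env/eis/MyJCA";

    // Looks up the connection factory and asks it for a connection; taking the
    // Context as a parameter keeps this usable (and testable) outside a server
    static Connection connect(Context ctx, String jndiName) throws NamingException, SQLException {
        DataSource ds = (DataSource) ctx.lookup(jndiName);
        return ds.getConnection();
    }

    // Inside a JSP or servlet, the default InitialContext is already wired
    // to the application server's naming service
    static Connection connectInServer() throws NamingException, SQLException {
        return connect(new InitialContext(), JNDI_NAME);
    }
}
```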

You'll see the following output when the adapter acquires the connection:

```
In MyManagedConnectionFactory.createConnectionFactory,1
In MyDataSource
In MyDataSource.getConnection,1
In MyManagedConnectionFactory.matchManagedConnections
In MyManagedConnectionFactory.createManagedConnection
In MyManagedConnection
In MyConnectionEventListener
In MyManagedConnection.getMetaData
In MyConnectionMetaData.constructor
In MyConnectionMetaData.getEISProductName
In MyConnectionMetaData.getEISProductVersion
In MyConnectionMetaData.getMaxConnections
In MyManagedConnection.getUserName
In MyManagedConnection.getConnection
In MyConnection
In MyManagedConnection.addMyConnection
In MyManagedConnection.addConnectionEventListener
```

If you see output like this, you are ready to start building your own connector.

Pic: Synchronous webservice with JAX-RPC